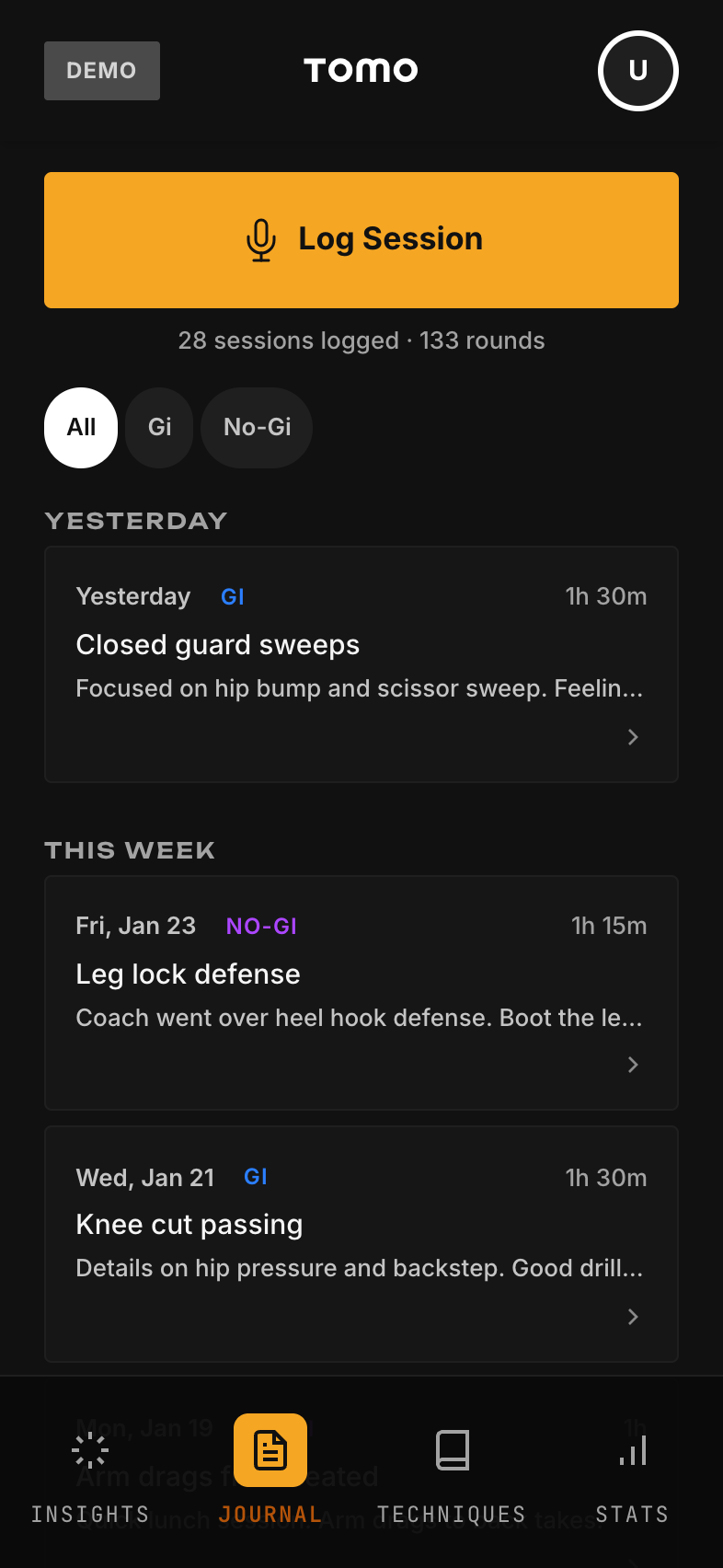

Cross-Cutting Systems

These aren't pages; they're systems that work across all pages. Your developer needs to understand them because many features depend on them.

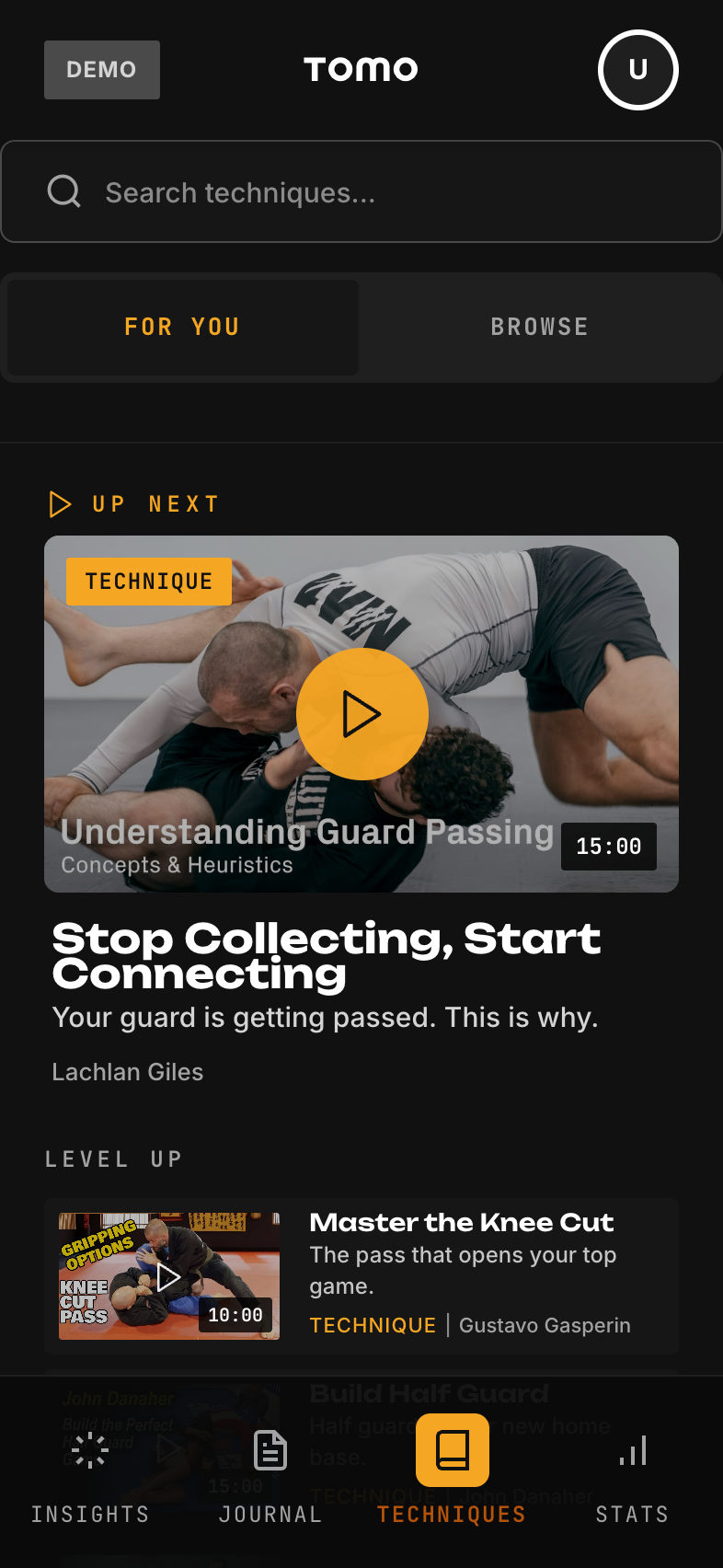

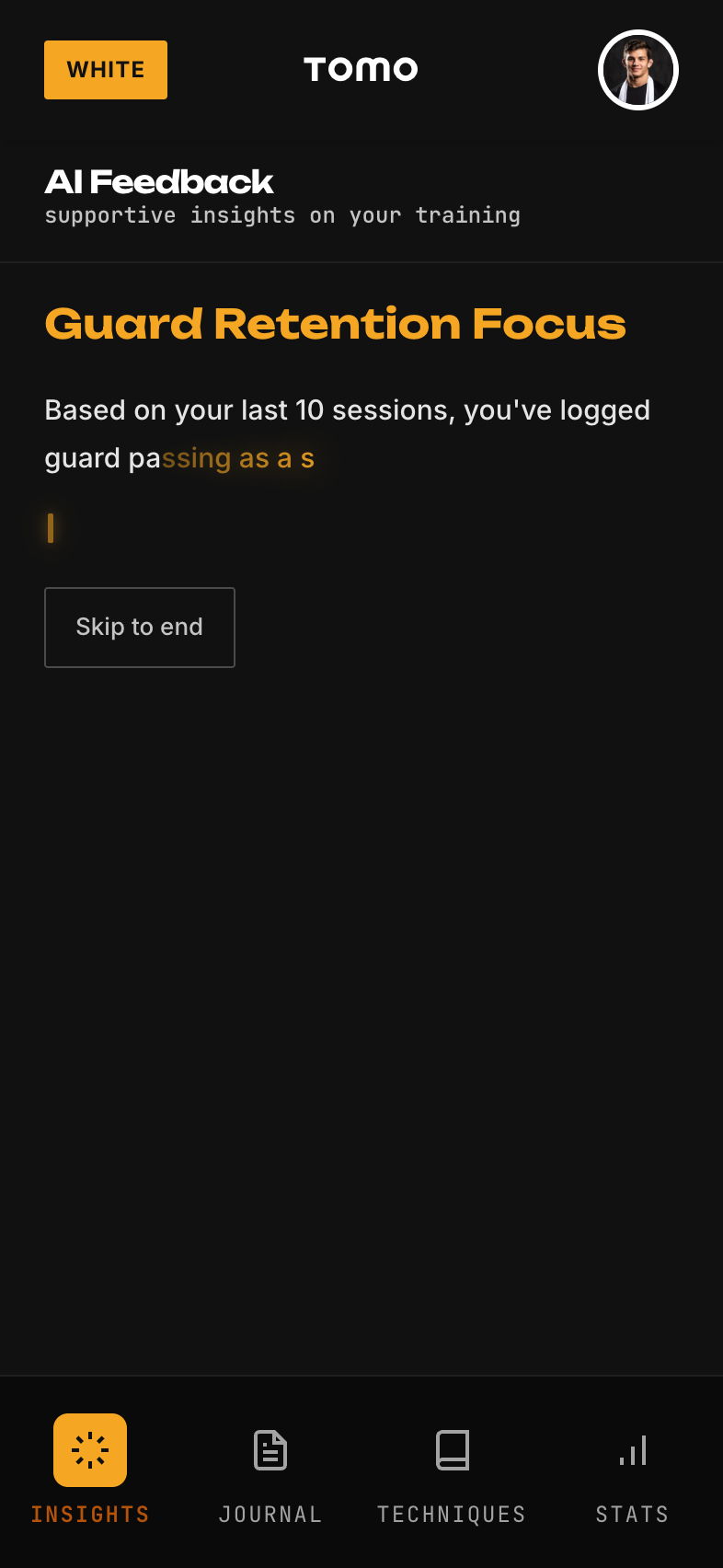

1. Belt Personalization System

The engine that makes the app feel different for each belt level. When a user logs in, this system determines what they see, how the AI talks to them, and what metrics matter.

Location: /prototype/src/config/belt-system/

Key files: belt-profiles.ts, dashboard.ts, session-logger.ts, insights.ts

Hook: useBeltPersonalization() returns profile, dashboard config, chatbot config, etc.

Usage: Import hook → access personalization data → render belt-specific UI
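As a rough illustration of the usage flow above, here is a minimal sketch. The real hook lives in the belt-system config and is not shown in this document, so `useBeltPersonalizationStub`, the `BeltPersonalization` shape, and the metric and tone values are all hypothetical stand-ins chosen so the example runs outside React.

```typescript
// Hypothetical shapes: the document only says the hook returns a profile,
// dashboard config, chatbot config, "etc." All field names are assumptions.
type BeltLevel = 'white' | 'blue' | 'purple' | 'brown' | 'black';

interface BeltPersonalization {
  profile: { belt: BeltLevel; label: string };
  dashboard: { primaryMetric: string };
  chatbot: { tone: string };
}

// Stand-in for useBeltPersonalization() so the sketch runs without React.
// The values returned here are placeholders, not the real configuration.
function useBeltPersonalizationStub(belt: BeltLevel): BeltPersonalization {
  const isBeginner = belt === 'white';
  return {
    profile: { belt: belt, label: belt + ' belt' },
    dashboard: { primaryMetric: isBeginner ? 'sessionsLogged' : 'techniqueVariety' },
    chatbot: { tone: isBeginner ? 'encouraging' : 'analytical' },
  };
}

// Import hook -> access personalization data -> render belt-specific UI.
function describeDashboard(belt: BeltLevel): string {
  const { profile, dashboard, chatbot } = useBeltPersonalizationStub(belt);
  return profile.label + ': track ' + dashboard.primaryMetric + ', ' + chatbot.tone + ' coach';
}
```

In a real component the stub would be replaced by the actual hook call, and the returned string by JSX.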

2. Progressive Profiling System

Instead of long onboarding forms, we ask profile questions gradually over ~20 sessions. The system tracks which questions to ask and when, and handles skipping.

Triggers: Session 3, 5, 7, 10, 12, 15, 18

Questions: Training start date, stripes, gym, goals, frequency, belt date, birth year

Skip logic: A user can skip each question up to 3 times; after that, we stop asking it

Storage: user.progressiveProfileAnswers, user.progressiveProfileSkips
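The trigger and skip rules above could be sketched roughly as follows. The trigger sessions, question list, skip limit, and storage field names come from this section; the pairing of each question with a specific session, the question keys, the `nextProfileQuestion` function, and the choice to re-ask a skipped question at the next trigger are assumptions for illustration.

```typescript
// Sessions and question order are from the spec; pairing them one-to-one
// and the camelCase keys are assumptions.
const PROFILE_SCHEDULE: { session: number; question: string }[] = [
  { session: 3, question: 'trainingStartDate' },
  { session: 5, question: 'stripes' },
  { session: 7, question: 'gym' },
  { session: 10, question: 'goals' },
  { session: 12, question: 'frequency' },
  { session: 15, question: 'beltDate' },
  { session: 18, question: 'birthYear' },
];
const MAX_SKIPS = 3;

interface ProfileState {
  progressiveProfileAnswers: Record<string, string>;
  progressiveProfileSkips: Record<string, number>;
}

// Returns the question to ask at this session, or null if none is due.
function nextProfileQuestion(sessionCount: number, user: ProfileState): string | null {
  // Only ask on a trigger session.
  let isTrigger = false;
  for (const slot of PROFILE_SCHEDULE) {
    if (slot.session === sessionCount) isTrigger = true;
  }
  if (!isTrigger) return null;

  // Pick the earliest due question that is unanswered and under the skip limit.
  for (const slot of PROFILE_SCHEDULE) {
    if (slot.session > sessionCount) break;
    const answered = user.progressiveProfileAnswers[slot.question] !== undefined;
    const skips = user.progressiveProfileSkips[slot.question] || 0;
    if (!answered && skips < MAX_SKIPS) return slot.question;
  }
  return null;
}
```

With this approach a question that was skipped once resurfaces at the next trigger session, and a question skipped three times is passed over in favor of the next one in the schedule.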

3. Risk Detection System

Monitors user behavior for signs that a user might quit BJJ. It tracks 11 warning signals with belt-specific weights and can trigger interventions such as encouraging messages.

Signals: Declining frequency, negative sentiment, injury mentions, long gaps, low energy, etc.

Belt multipliers: White belt signals are weighted 1.5x because white belts have a higher dropout risk

Output: Risk score (0-100), triggered signals array, recommended intervention

Location: /prototype/src/config/belt-system/risk-signals.ts
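To make the scoring concrete, here is one way the weighted signals, belt multiplier, and 0-100 output could fit together. Only the 1.5x white belt multiplier and the three output fields come from this section; the signal weights, the multipliers for other belts, the intervention threshold of 60, and the names `assessRisk` and `RiskSignal` are assumptions, not the contents of risk-signals.ts.

```typescript
type BeltRank = 'white' | 'blue' | 'purple' | 'brown' | 'black';

interface RiskSignal {
  id: string;
  weight: number;     // base contribution to the score (placeholder values)
  triggered: boolean; // whether this signal fired for the user
}

interface RiskResult {
  score: number;                // 0-100
  triggeredSignals: string[];
  intervention: string | null;
}

// Only the white belt value is from the spec; the rest are placeholders.
const BELT_MULTIPLIER: Record<BeltRank, number> = {
  white: 1.5, blue: 1.0, purple: 1.0, brown: 1.0, black: 1.0,
};

// Assumed threshold above which an intervention is recommended.
const INTERVENTION_THRESHOLD = 60;

function assessRisk(belt: BeltRank, signals: RiskSignal[]): RiskResult {
  const triggeredSignals: string[] = [];
  let raw = 0;
  for (const signal of signals) {
    if (signal.triggered) {
      triggeredSignals.push(signal.id);
      raw += signal.weight;
    }
  }
  // Apply the belt multiplier, then clamp to the 0-100 range.
  const score = Math.min(100, Math.round(raw * BELT_MULTIPLIER[belt]));
  const intervention = score >= INTERVENTION_THRESHOLD ? 'encouraging_message' : null;
  return { score: score, triggeredSignals: triggeredSignals, intervention: intervention };
}
```

Under these placeholder weights, the same two triggered signals produce a higher score for a white belt than for a blue belt, which is the effect the multiplier is meant to have.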

4. Journal Pattern Analysis

Analyzes session notes text to detect patterns: ego challenges, breakthroughs, plateau frustration, injury concerns, etc. Powers insights and risk detection.

Categories: ego_challenge, breakthrough, plateau, injury_concern, social_positive, goal_progress, etc.

Input: session.notes text

Output: Array of detected patterns with confidence scores

Usage: Feed into insights generation and risk detection
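The input and output contract above can be illustrated with a simple keyword matcher. This section does not say how patterns are actually detected, so keyword matching is a stand-in technique here, and the keyword lists, the confidence formula (fraction of a category's keywords found), and the name `analyzeNotes` are assumptions. Only a subset of the categories is shown.

```typescript
type PatternCategory = 'ego_challenge' | 'breakthrough' | 'plateau' | 'injury_concern';

interface DetectedPattern {
  category: PatternCategory;
  confidence: number; // 0-1, here the fraction of the category's keywords found
}

// Placeholder keyword lists, for illustration only.
const PATTERN_KEYWORDS: Record<PatternCategory, string[]> = {
  ego_challenge: ['embarrassed', 'smashed', 'humbled'],
  breakthrough: ['finally', 'clicked', 'first time'],
  plateau: ['stuck', 'not improving', 'plateau'],
  injury_concern: ['hurt', 'sore', 'tweaked'],
};

// Takes session.notes text and returns the detected patterns.
function analyzeNotes(notes: string): DetectedPattern[] {
  const text = notes.toLowerCase();
  const found: DetectedPattern[] = [];
  const categories = Object.keys(PATTERN_KEYWORDS) as PatternCategory[];
  for (const category of categories) {
    const keywords = PATTERN_KEYWORDS[category];
    let hits = 0;
    for (const keyword of keywords) {
      if (text.indexOf(keyword) !== -1) hits++;
    }
    if (hits > 0) {
      found.push({ category: category, confidence: hits / keywords.length });
    }
  }
  return found;
}
```

The returned array could then be passed to insights generation and to the risk detection system, for example by treating a high-confidence `injury_concern` or `plateau` pattern as a triggered risk signal.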

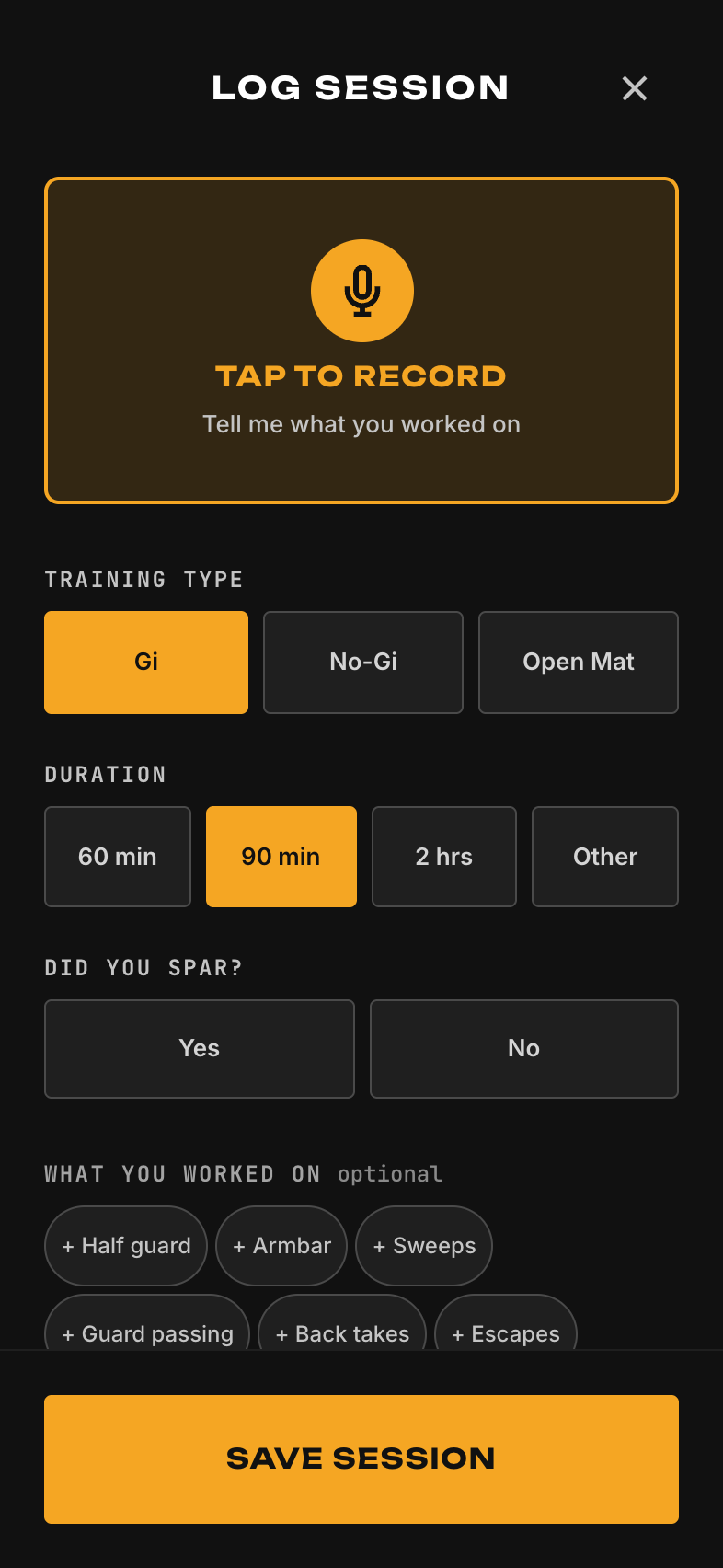

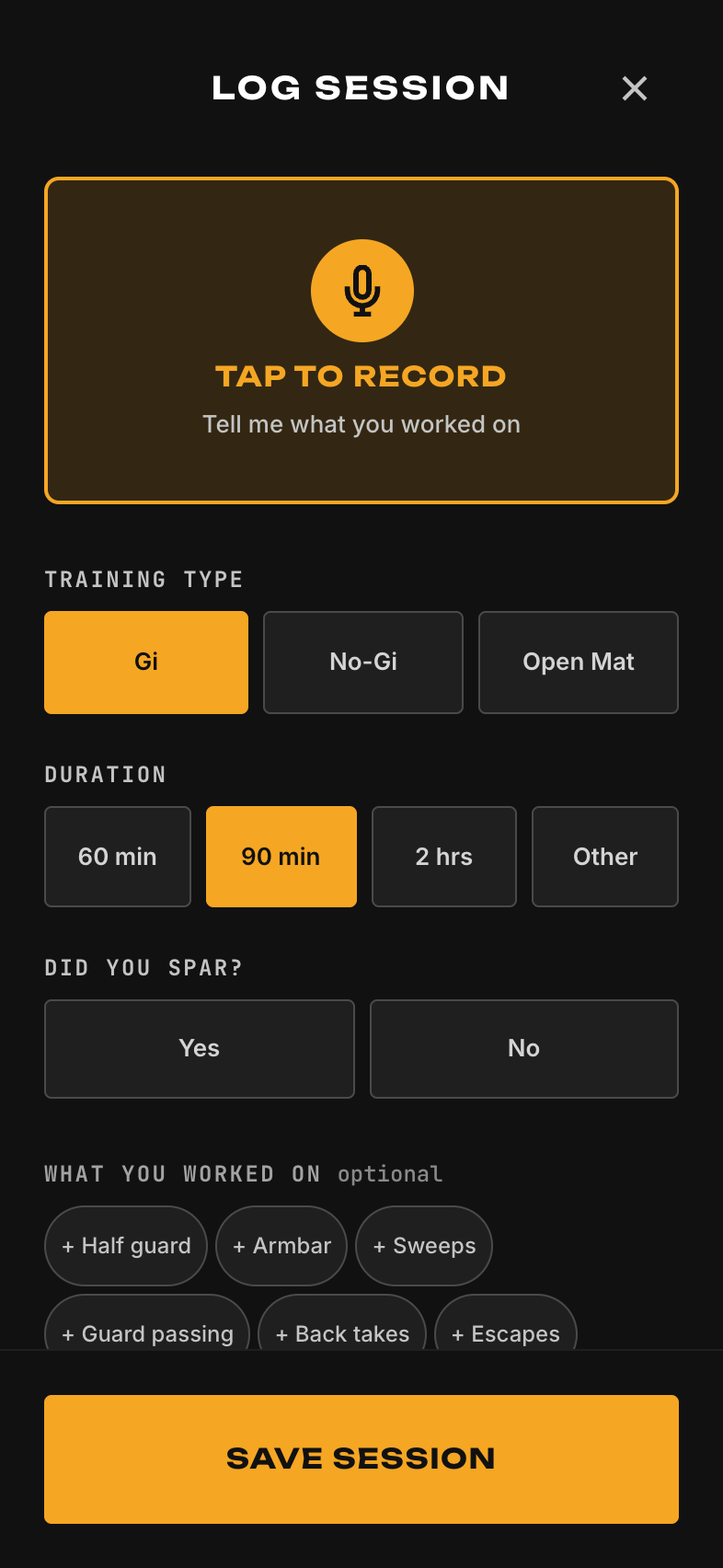

5. Smart Voice Parser

Takes raw transcribed text and extracts structured data: training type, techniques mentioned, roll outcomes, injuries, mood indicators.

Input: Raw transcription text (e.g., "Did no-gi today, got tapped twice with triangles")

Output: { trainingType: 'nogi', techniques: ['triangle'], rolls: [{ outcome: 'loss' }, { outcome: 'loss' }] }

Technique matching: Fuzzy match against techniques database

Location: /prototype/src/utils/voice-parser.ts (planned)
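Since the parser is still planned, the following is only a sketch of how the example input above could map to the example output. The technique list, the use of edit distance (tolerance 1) for fuzzy matching, the "got tapped" phrase rule, and the names `parseTranscript` and `editDistance` are assumptions. Only losses are parsed, to keep the example short.

```typescript
interface ParsedSession {
  trainingType: 'gi' | 'nogi' | null;
  techniques: string[];
  rolls: { outcome: 'win' | 'loss' }[];
}

// Placeholder for the techniques database.
const KNOWN_TECHNIQUES = ['triangle', 'armbar', 'kimura', 'guillotine'];
const COUNT_WORDS: Record<string, number> = { once: 1, twice: 2 };

// Levenshtein edit distance between two strings.
function editDistance(a: string, b: string): number {
  let prev: number[] = [];
  for (let j = 0; j <= b.length; j++) prev.push(j);
  for (let i = 1; i <= a.length; i++) {
    const curr: number[] = [i];
    for (let j = 1; j <= b.length; j++) {
      const cost = a.charAt(i - 1) === b.charAt(j - 1) ? 0 : 1;
      curr.push(Math.min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost));
    }
    prev = curr;
  }
  return prev[b.length];
}

function parseTranscript(text: string): ParsedSession {
  const lower = text.toLowerCase();
  const words = lower.split(/[^a-z0-9-]+/).filter(w => w.length > 0);

  // Check "no-gi" / "no gi" / "nogi" before plain "gi".
  let trainingType: ParsedSession['trainingType'] = null;
  if (/\bno[- ]?gi\b/.test(lower)) trainingType = 'nogi';
  else if (/\bgi\b/.test(lower)) trainingType = 'gi';

  // Fuzzy match: accept words within one edit of a known technique.
  const techniques: string[] = [];
  for (const word of words) {
    for (const technique of KNOWN_TECHNIQUES) {
      if (editDistance(word, technique) <= 1 && techniques.indexOf(technique) === -1) {
        techniques.push(technique);
      }
    }
  }

  // "got tapped [once|twice]" -> that many losses (defaults to one).
  const rolls: ParsedSession['rolls'] = [];
  const tapped = /\bgot tapped(?: (\w+))?/.exec(lower);
  if (tapped) {
    const count = COUNT_WORDS[tapped[1] || ''] || 1;
    for (let i = 0; i < count; i++) rolls.push({ outcome: 'loss' });
  }

  return { trainingType: trainingType, techniques: techniques, rolls: rolls };
}
```

A tolerance of one edit is enough to map "triangles" to "triangle"; a real technique matcher would likely need aliases and multi-word names as well.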